From the published article:

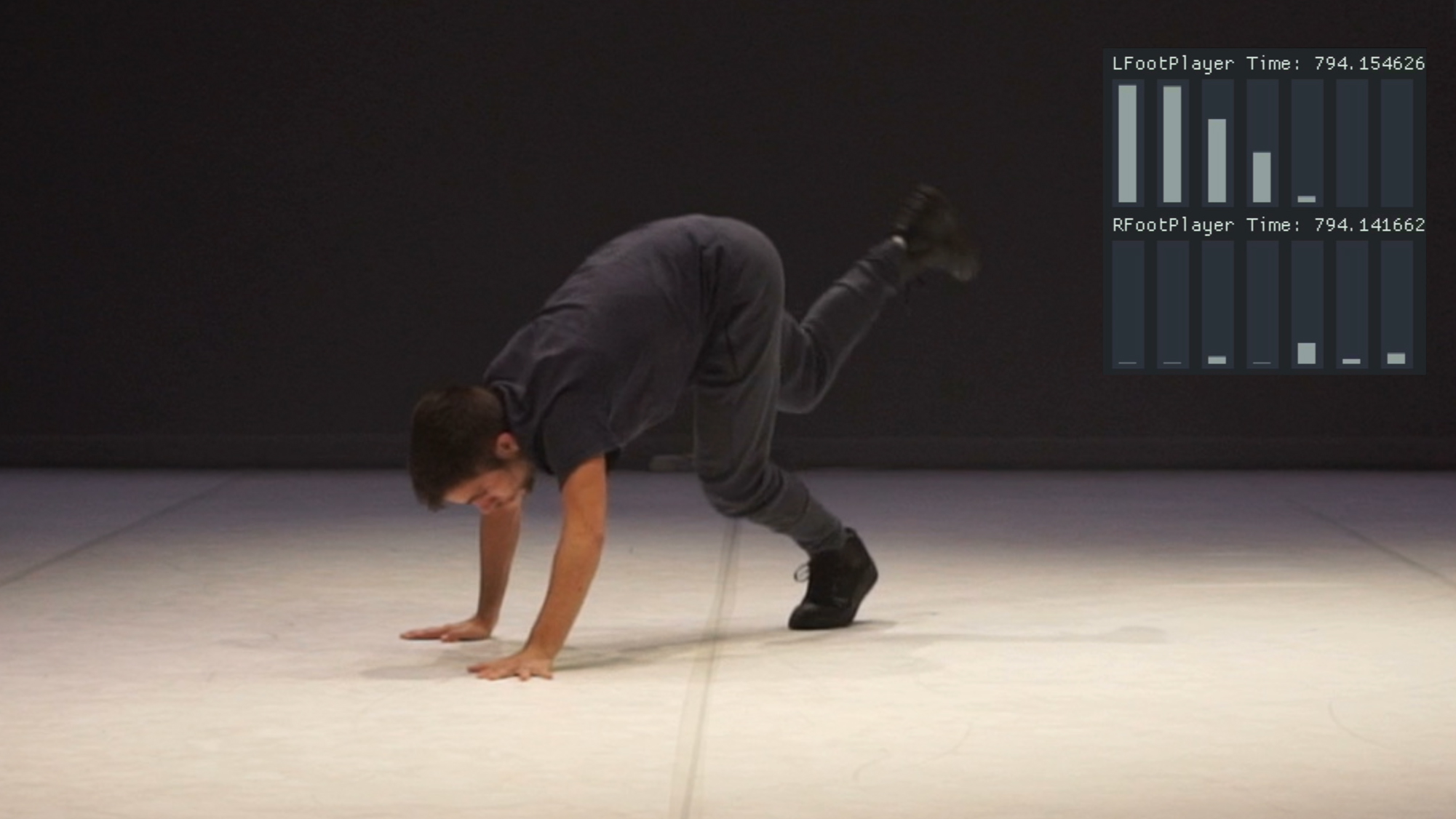

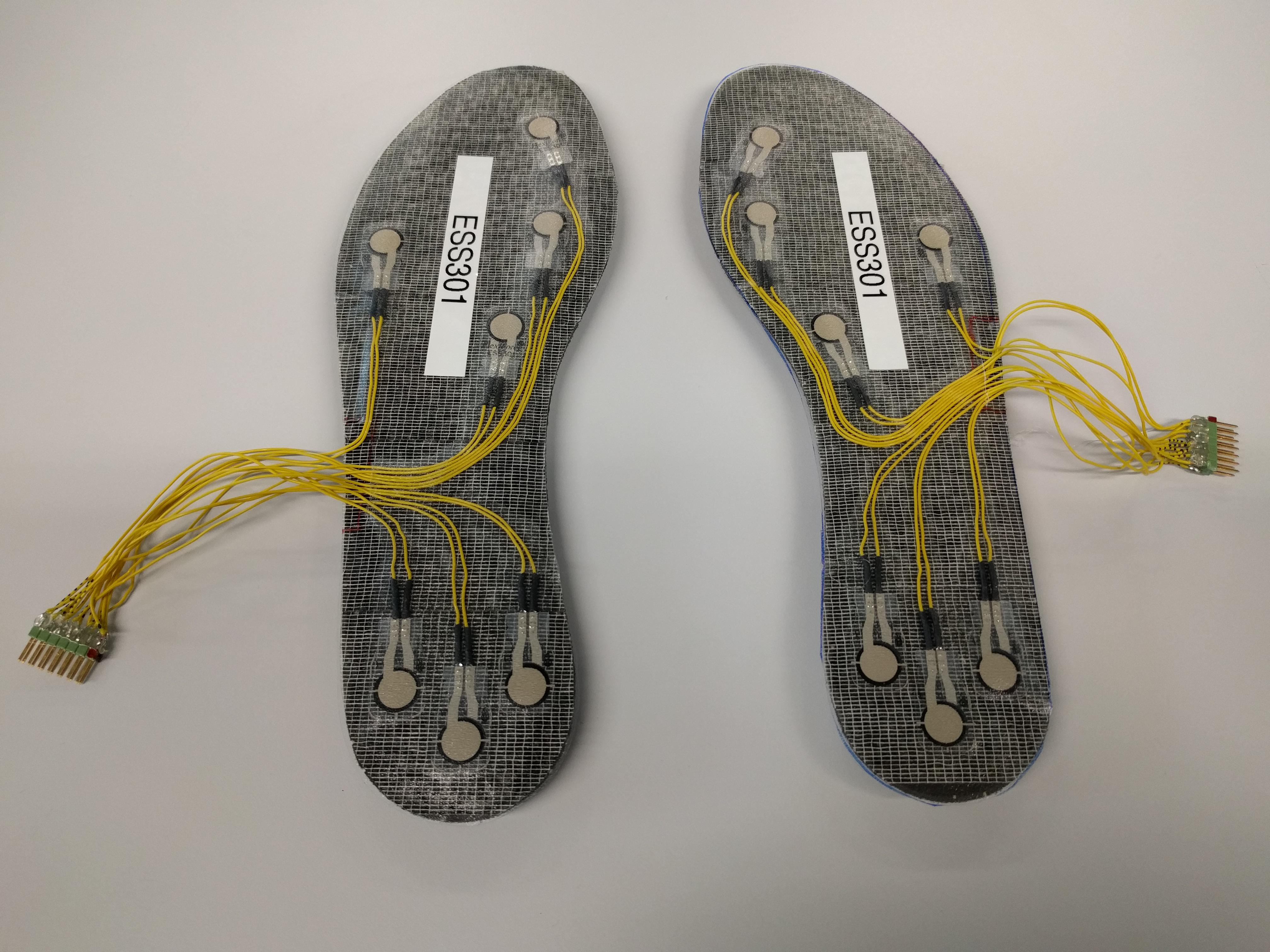

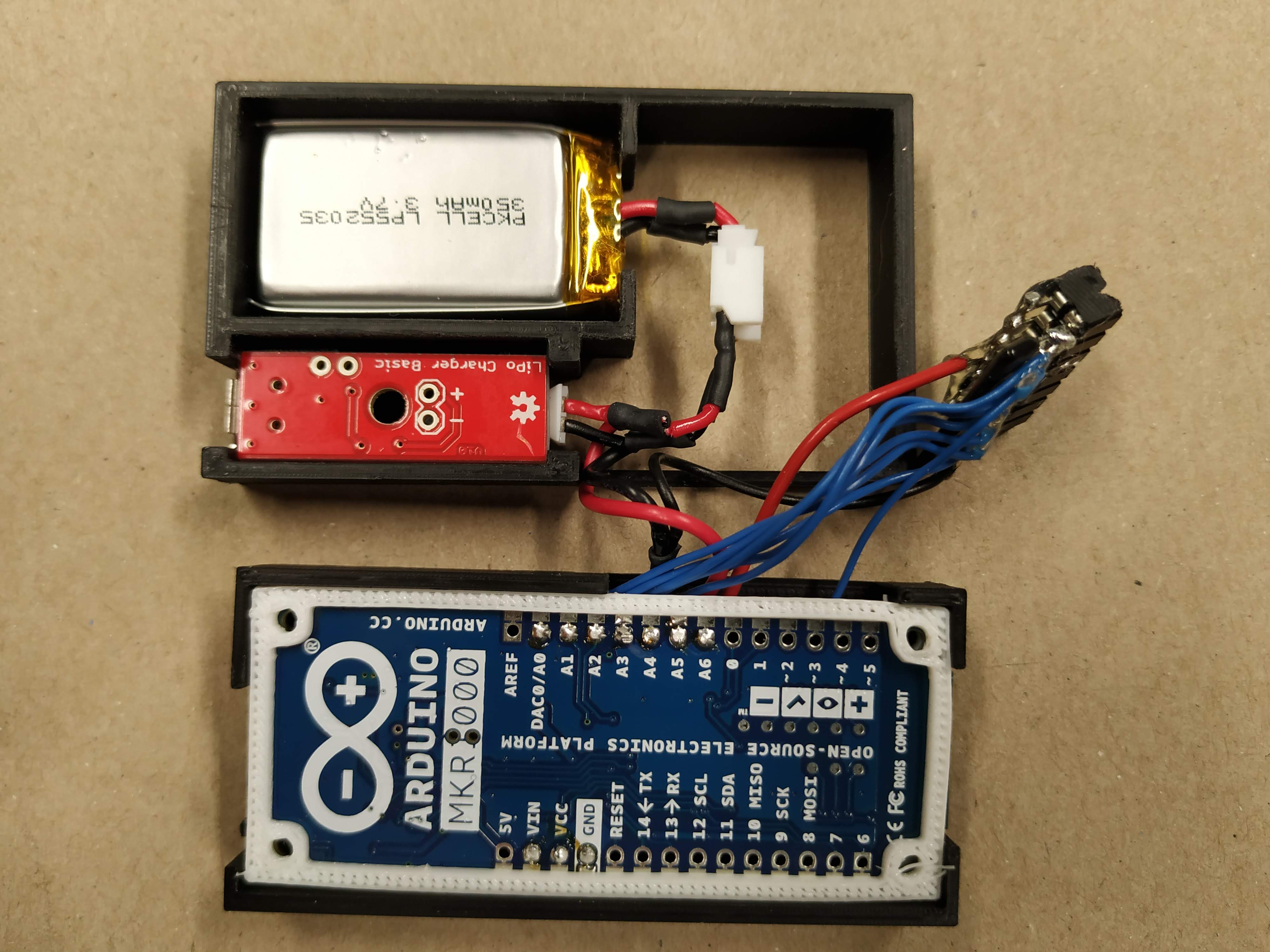

The project Sounding Feet explores how small postural changes can be used to control music. From an artistic point of view, this interactive relationship is interesting for two reasons: it links the musical outcome of the interaction to the dancer’s proprioceptive awareness, and it makes audible to an audience minute movements that might otherwise be visually hidden. The project follows an approach that combines musical ideation, dance improvisation, interaction design, and engineering. Through this combination, development and design decisions (e.g. the characteristics, number, and position of the force-sensing resistors) can be informed by artistic criteria.

The sonification of the sensor data is based on two vocal sound synthesis models developed in the SuperCollider programming language. One model employs additive sound synthesis, the other subtractive sound synthesis, and both are combined with waveshaping techniques. These vocal synthesis models were chosen because they can produce a wide range of sonic results.
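To make the additive variant more concrete, the following is a minimal Python sketch of formant-weighted additive synthesis followed by waveshaping. It is not the project's SuperCollider code. The function names, the Gaussian-shaped formant envelope, the `tanh` waveshaper, and the formant values in the usage example are all assumptions chosen for illustration.

```python
import math

def formant_gain(freq_hz, formants):
    """Spectral envelope: sum of Gaussian-shaped peaks evaluated at freq_hz.

    formants is a list of (center_hz, bandwidth_hz, amplitude) tuples.
    The Gaussian shape is an illustrative assumption.
    """
    return sum(
        amp * math.exp(-0.5 * ((freq_hz - center) / bw) ** 2)
        for center, bw, amp in formants
    )

def additive_vowel(f0_hz, formants, duration_s=0.05,
                   sample_rate=44100, n_harmonics=40):
    """Additive synthesis: sum harmonics of f0, each weighted by the envelope."""
    nyquist = sample_rate / 2
    partials = [
        (k * f0_hz, formant_gain(k * f0_hz, formants))
        for k in range(1, n_harmonics + 1)
        if k * f0_hz < nyquist  # skip partials that would alias
    ]
    n_samples = int(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        samples.append(sum(a * math.sin(2 * math.pi * f * t) for f, a in partials))
    peak = max(abs(s) for s in samples) or 1.0
    return [s / peak for s in samples]  # normalise to the range [-1, 1]

def waveshape(samples, drive=2.0):
    """Soft-clipping waveshaper; tanh is one common transfer function."""
    norm = math.tanh(drive)
    return [math.tanh(drive * s) / norm for s in samples]

# Hypothetical, rough formant values for an open vowel.
VOWEL_A = [(800.0, 80.0, 1.0), (1150.0, 90.0, 0.5), (2900.0, 120.0, 0.25)]
shaped = waveshape(additive_vowel(220.0, VOWEL_A))
```

In this sketch, changing the formant centre frequencies or bandwidths reshapes the vowel colour, which is the kind of parameter a sensor value could drive.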

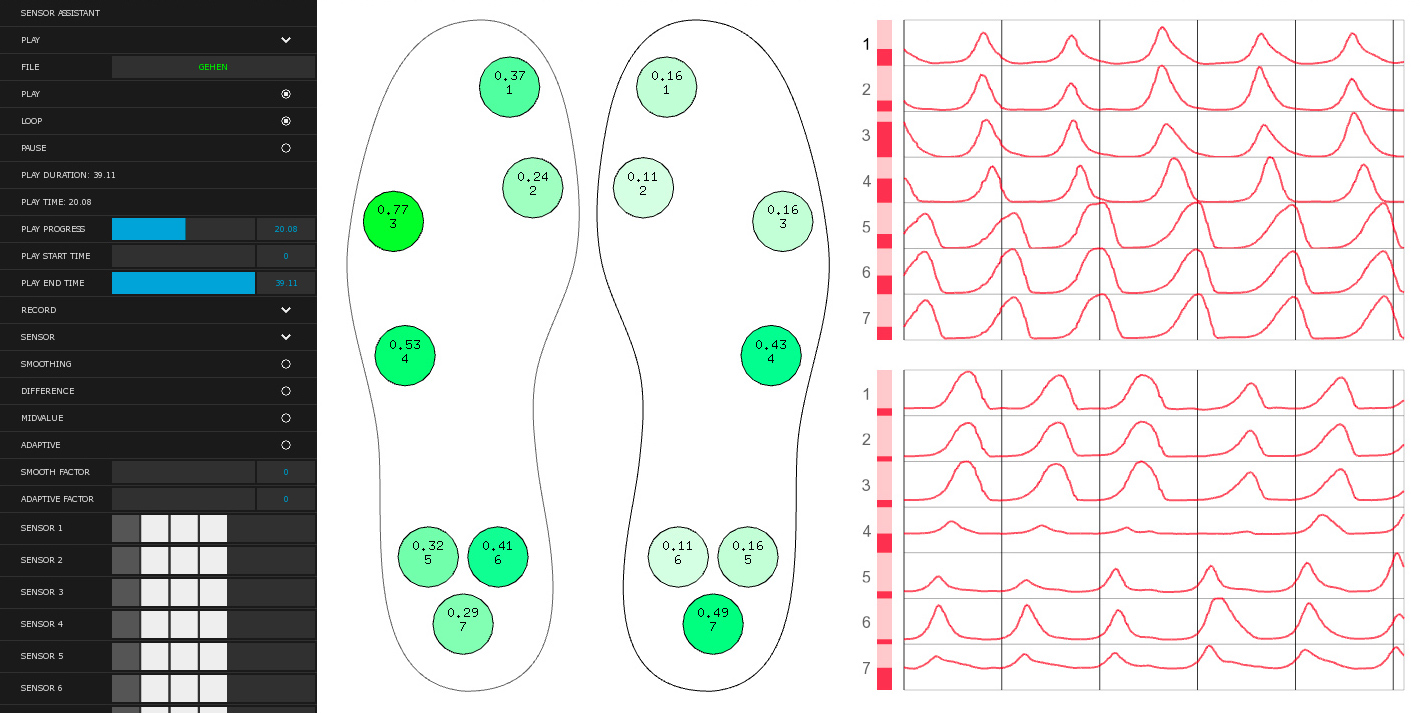

To control sound synthesis, a simple parameter mapping scheme is employed. Either each individual force sensor value or the combined force sensor values of one shoe are mapped onto synthesis parameters. The first approach aids the audible distinction of different pressure distributions across each foot, whereas the latter approach simplifies the distinction between left and right foot activities. The synthesis parameters used for mapping include vibrato, tremolo, formant frequencies, formant bandwidths, and resonant frequencies. The mapping also controls audio spatialisation in an octophonic speaker array, where the directionality of the sonic output controlled by each sensor corresponds to the location of that sensor relative to the center of the shoe inlay.
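The two mapping strategies and the direction-based spatialisation can be sketched in Python as follows. This is an illustration under stated assumptions rather than the project's implementation: sensor values are assumed to be normalised to the range 0 to 1, the combined value is taken as the mean, the eight speakers are assumed to form an evenly spaced ring, and equal-power panning between adjacent speakers is an assumed technique. All function names are hypothetical.

```python
import math

def map_linear(value, out_min, out_max, in_min=0.0, in_max=1.0):
    """Clamp value to [in_min, in_max] and rescale it to [out_min, out_max]."""
    clamped = min(max(value, in_min), in_max)
    norm = (clamped - in_min) / (in_max - in_min)
    return out_min + norm * (out_max - out_min)

def per_sensor_mapping(sensor_values, out_min, out_max):
    """First approach: each sensor drives its own parameter value."""
    return [map_linear(v, out_min, out_max) for v in sensor_values]

def combined_mapping(sensor_values, out_min, out_max):
    """Second approach: all sensors of one shoe drive a single value.

    Using the mean as the combination rule is an assumption.
    """
    mean = sum(sensor_values) / len(sensor_values)
    return map_linear(mean, out_min, out_max)

def sensor_azimuth(x, y):
    """Direction of a sensor at (x, y) relative to the inlay center (0, 0).

    Returns radians in [0, 2*pi), with 0 pointing forward (+y), clockwise.
    """
    return math.atan2(x, y) % (2 * math.pi)

def octophonic_gains(azimuth, n_speakers=8):
    """Equal-power pan between the two speakers nearest to the azimuth."""
    spacing = 2 * math.pi / n_speakers
    pos = (azimuth % (2 * math.pi)) / spacing
    lower = int(pos) % n_speakers
    upper = (lower + 1) % n_speakers
    frac = pos - int(pos)
    gains = [0.0] * n_speakers
    gains[lower] = math.cos(frac * math.pi / 2)
    gains[upper] = math.sin(frac * math.pi / 2)
    return gains

# Hypothetical example: a sensor to the front right of the inlay center,
# pressed halfway, controlling a formant frequency between 400 and 1200 Hz.
formant_hz = map_linear(0.5, 400.0, 1200.0)
gains = octophonic_gains(sensor_azimuth(1.0, 1.0))
```

With per-sensor mapping, each sensor would get its own parameter value and its own set of speaker gains. With combined mapping, one value per shoe is enough to tell left from right.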